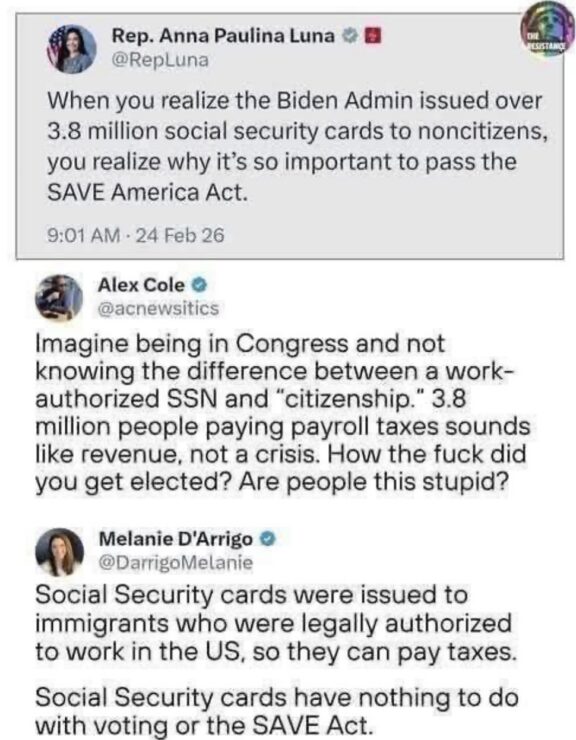

Fact-checking is good. Name-calling, not so much.

Calling people who disagree with you stupid is one of the worst things you can do. Please don’t.

One of the biggest reasons MAGA types support Trump so strongly is that they resent liberals who look down on them.

Don’t make it worse.

If you really want to make a positive difference, share a polite fact-check with a MAGA friend you think might be switchable. There are more of them than you might think, and flipping just a few would make a giant difference.

Found on Facebook: